Ingress controller with application setup

✅ Install Ingress Controller

✅ Deploy Sample App in a Namespace

✅ Configure Ingress Rule

✅ Map FQDN in /etc/hosts

✅ Access http://sample-app.local

Ingress Controller: This will act as a reverse proxy, routing external traffic to the appropriate service in your cluster.

Service: The backend application or microservice that will handle the request.

Load Balancer (Optional): If you want, you can set up a load balancer for high availability. However, it’s not strictly necessary to test the Ingress controller.

Step 1: Install an Ingress Controller

First, we need to deploy an Ingress Controller to handle HTTP/S traffic. A popular one is NGINX Ingress Controller.

Run the following command to install the NGINX Ingress Controller via Helm (you should have Helm installed):

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

helm install ingress-nginx ingress-nginx/ingress-nginx --namespace ingress-nginx --create-namespace

This will deploy the NGINX Ingress Controller in a separate namespace called ingress-nginx.

// Before that: what is Helm?

Helm is a package manager for Kubernetes. A Helm chart simplifies deployment by managing the resources for you (such as Deployments, Services, and ConfigMaps), so you don't have to write them manually.

// Install Helm

[root@master boobalan]# curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 11913 100 11913 0 0 785 0 0:00:15 0:00:15 --:--:-- 792

[WARNING] Could not find git. It is required for plugin installation.

Downloading https://get.helm.sh/helm-v3.17.1-linux-amd64.tar.gz

Verifying checksum... Done.

Preparing to install helm into /usr/local/bin

helm installed into /usr/local/bin/helm

// The script installs Helm automatically. Check the version:

[root@master boobalan]# helm version

version.BuildInfo{Version:"v3.17.1", GitCommit:"980d8ac1939e39138101364400756af2bdee1da5", GitTreeState:"clean", GoVersion:"go1.23.5"}

[root@master boobalan]# helm repo list

Error: no repositories to show

[root@master boobalan]# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

"ingress-nginx" has been added to your repositories

[root@master boobalan]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "ingress-nginx" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@master boobalan]# helm repo list

NAME URL

ingress-nginx https://kubernetes.github.io/ingress-nginx

// Install the Ingress controller

[root@master boobalan]# helm install ingress-nginx ingress-nginx/ingress-nginx --namespace ingress-nginx --create-namespace

Error: INSTALLATION FAILED: failed pre-install: 1 error occurred:

* timed out waiting for the condition

// The install failed, so let's go through it step by step. The --create-namespace flag automatically creates the ingress-nginx namespace.

// OK, the namespace exists:

[root@master boobalan]# kubectl get namespace ingress-nginx -o wide

NAME STATUS AGE

ingress-nginx Active 24m

[root@master boobalan]# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-5q9tf 0/1 Completed 0 22m

[root@master boobalan]# kubectl describe pod ingress-nginx-admission-create-5q9tf -n ingress-nginx

Name: ingress-nginx-admission-create-5q9tf

Namespace: ingress-nginx

Priority: 0

Service Account: ingress-nginx-admission

Node: worker1.boobi.com/192.168.198.141

Start Time: Sun, 23 Feb 2025 11:53:31 +0100

// Check the status of the Helm release

[root@master boobalan]# helm status ingress-nginx -n ingress-nginx

NAME: ingress-nginx

LAST DEPLOYED: Sun Feb 23 11:51:02 2025

NAMESPACE: ingress-nginx

STATUS: failed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

// I still don't see a deployment for ingress-nginx, and the pod log shows an error as well

// Pod log

W0223 10:58:46.075747 1 client_config.go:667] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

{"err":"secrets \"ingress-nginx-admission\" not found","level":"info","msg":"no secret found","source":"k8s/k8s.go:229","time":"2025-02-23T10:58:48Z"}

{"level":"info","msg":"creating new secret","source":"cmd/create.go:28","time":"2025-02-23T10:58:48Z"}

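// Fix: re-run the install as an upgrade

# helm upgrade --install ingress-nginx ingress-nginx/ingress-nginx --namespace ingress-nginx --create-namespace --set controller.admissionWebhooks.enabled=true

// Make sure the Helm values for the Ingress controller are correct, especially the admission webhook settings. controller.admissionWebhooks.enabled should be true. In current versions of the chart this is already the default, so re-running the install after the timeout is probably what fixed it here.

// Now verify the setup. helm show values prints the chart's default values; helm get values shows what was set on the release.

# helm show values ingress-nginx/ingress-nginx

# helm get values ingress-nginx -n ingress-nginx

// Now the Ingress controller is ready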

kubectl get deployments -n ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx-controller 1/1 1 1 164m

// Basic Helm commands

helm repo add <name> <url>

helm repo update

helm repo remove <name>

// Once the Ingress controller is installed, move on to the sample application

Step 2: Create a Sample Application

//You can create a simple HTTP application that can be exposed via an Ingress. Here's an example of a basic nginx-based app:

///Namespaces in Kubernetes are logical separations within the cluster. Think of them as separate environments within the same Kubernetes cluster.

ingress-nginx namespace → This is where the Ingress Controller runs. It manages traffic routing but does not run application services.

Default (default) namespace → If you don't specify a namespace, Kubernetes places resources in the default namespace.

Custom namespaces → You can create your own namespace for better organization.

// Create our own namespace, create the sample application inside it, and route the application traffic through the Ingress controller

//create namespace

[root@master boobalan]# kubectl create namespace akilan

namespace/akilan created

#vi sample-app.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
  namespace: akilan # <-- Define the namespace here
  labels:
    app: sample-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
        - name: nginx
          image: nginx:alpine
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: sample-app
  namespace: akilan # <-- Define the namespace here
spec:
  selector:
    app: sample-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: ClusterIP

[root@master akilan]# kubectl apply -f sample-app.yaml

deployment.apps/sample-app created

service/sample-app created

[root@master akilan]# kubectl -n akilan get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

sample-app ClusterIP 10.105.14.150 <none> 80/TCP 101s

[root@master akilan]# kubectl -n akilan get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

sample-app 1/1 1 1 112s

[root@master akilan]# kubectl get pods -n akilan

NAME READY STATUS RESTARTS AGE

sample-app-5f4b888ff9-l952q 1/1 Running 0 14m

// Now the sample app is running and has a ClusterIP, which is only reachable from inside the cluster

[root@master akilan]# curl http://10.105.14.150

<!DOCTYPE html>

<html>

<head>

[root@master akilan]# kubectl -n akilan exec -it sample-app-5f4b888ff9-l952q -- curl http://10.105.14.150

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

// Now we need to reach the app from outside the cluster, either through Ingress or the plain way with NodePort: change the service type to NodePort and configure the endpoints, which makes the service available outside the cluster at <NodeIP>:<NodePort>.

// That method is already covered in the previous blog.

// Here we are going to test this using the Ingress controller.

Step 3: Create the Ingress Resource

We need to define an Ingress resource to expose the service externally via HTTP/S. Here's an example of how you can expose the sample-app via the Ingress controller:

[root@master akilan]# vi sample-app-ingress.yaml

[root@master akilan]# cat sample-app-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-app-ingress
  namespace: akilan
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx # <-- Add this line (it was missing at first; see the 404 fix below)
  rules:
    - host: sample-app.local
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: sample-app
                port:
                  number: 80

[root@master akilan]# kubectl apply -f sample-app-ingress.yaml

ingress.networking.k8s.io/sample-app-ingress created

[root@master akilan]# kubectl -n akilan get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sample-app-ingress <none> sample-app.local 80 32s

[root@master akilan]# kubectl get service -n akilan

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

sample-app ClusterIP 10.105.14.150 <none> 80/TCP 40m

[root@master akilan]# kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.99.11.4 <pending> 80:31989/TCP,443:31498/TCP 3h40m

ingress-nginx-controller-admission ClusterIP 10.111.198.94 <none> 443/TCP 3h40m

// EXTERNAL-IP stays <pending> because nothing in this cluster provides a load balancer, so we need to expose the Ingress controller using a NodePort to reach it from outside the cluster.

[root@master akilan]# kubectl patch svc ingress-nginx-controller -n ingress-nginx -p '{"spec": {"type": "NodePort"}}'

service/ingress-nginx-controller patched

//now check the service of ingress controller

[root@master akilan]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.99.11.4 <none> 80:31989/TCP,443:31498/TCP 3h45m

ingress-nginx-controller-admission ClusterIP 10.111.198.94 <none> 443/TCP 3h45m

// Now the type has changed from LoadBalancer to NodePort. Here, 31989 is the dynamically assigned NodePort for HTTP traffic (port 80) and 31498 is the one for HTTPS (port 443).
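To make the PORT(S) column easier to read, here is a small Python sketch that splits a value such as 80:31989/TCP,443:31498/TCP into service-port to NodePort pairs. The function name parse_node_ports is made up for this illustration; it is not part of kubectl or any Kubernetes library.

```python
def parse_node_ports(ports_column: str) -> dict[int, int]:
    """Map each service port to its NodePort from a kubectl PORT(S) value."""
    mapping = {}
    for entry in ports_column.split(","):
        # "80:31989/TCP" -> port part "80:31989", protocol "TCP"
        port_part, _, _protocol = entry.strip().partition("/")
        if ":" not in port_part:
            continue  # an entry like "443/TCP" has no NodePort
        service_port, node_port = port_part.split(":")
        mapping[int(service_port)] = int(node_port)
    return mapping


ports = parse_node_ports("80:31989/TCP,443:31498/TCP")
print(ports[80])   # NodePort that forwards to HTTP (port 80)
print(ports[443])  # NodePort that forwards to HTTPS (port 443)
```

So plain http:// requests should go to the NodePort mapped to 80, and https:// requests to the one mapped to 443.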

// Now check the IPs of our nodes

[root@master akilan]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master.boobi.com Ready control-plane 16h v1.29.14 192.168.198.140 <none> Rocky Linux 9.5 (Blue Onyx) 5.14.0-503.26.1.el9_5.x86_64 containerd://1.7.25

worker1.boobi.com Ready <none> 14h v1.29.14 192.168.198.141 <none> Rocky Linux 9.3 (Blue Onyx) 5.14.0-362.24.1.el9_3.0.1.x86_64 containerd://1.7.25

// Now access the site via http://192.168.198.140:31989 (31989 is the HTTP NodePort; 31498 is the HTTPS one)

// Edit the hosts file: 192.168.198.140 sample-app.local

// Then access http://sample-app.local:31989

// Here I am seeing an nginx 404 error. Let's fix this issue.

// The Ingress is missing a class, which tells Kubernetes which Ingress controller should handle it.

[root@master akilan]# kubectl get ingress -n akilan

NAME CLASS HOSTS ADDRESS PORTS AGE

sample-app-ingress <none> sample-app.local 80 19m

[root@master akilan]# kubectl patch ingress sample-app-ingress -n akilan -p '{"spec":{"ingressClassName":"nginx"}}'

ingress.networking.k8s.io/sample-app-ingress patched

[root@master akilan]# kubectl get ingress -n akilan

NAME CLASS HOSTS ADDRESS PORTS AGE

sample-app-ingress nginx sample-app.local 80 21m
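Why the patch matters can be modelled in a few lines of Python. This is a deliberately simplified sketch, not the controller's real logic: it assumes a controller that only picks up Ingresses whose spec.ingressClassName equals its own class, and it ignores other mechanisms such as a default IngressClass. The function name is_claimed is hypothetical.

```python
def is_claimed(ingress: dict, controller_class: str = "nginx") -> bool:
    """Return True if the Ingress names the class this controller serves."""
    return ingress.get("spec", {}).get("ingressClassName") == controller_class


# The Ingress as first applied (CLASS shows <none>) and after the patch.
before_patch = {"metadata": {"name": "sample-app-ingress"}, "spec": {}}
after_patch = {
    "metadata": {"name": "sample-app-ingress"},
    "spec": {"ingressClassName": "nginx"},
}

print(is_claimed(before_patch))  # False: the controller skips it
print(is_claimed(after_patch))   # True: the controller loads its rules
```

Under that assumption, an Ingress with no class never reaches the NGINX configuration, which matches the 404 seen before the patch.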

[root@master akilan]# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-5f649bbff8-v42t7 1/1 Running 2 (81m ago) 3h54m

[root@master akilan]#

[root@master akilan]# kubectl get endpoints -n akilan

NAME ENDPOINTS AGE

sample-app 10.0.1.218:80 59m

// Test this from inside the cluster

[root@master akilan]# kubectl exec -it ingress-nginx-controller-5f649bbff8-v42t7 -n ingress-nginx -- curl -I http://sample-app.akilan.svc.cluster.local

HTTP/1.1 405 Not Allowed

date: Sun, 23 Feb 2025 15:27:22 GMT

content-type: text/html

content-length: 154

server: Parking/1.0

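// A "405 Not Allowed" response means a server answered but rejected the request method (here HEAD, which is what curl -I sends). Note the "server: Parking/1.0" header: the sample app identifies itself as nginx (see the test in the pod below), so this reply probably did not come from the sample-app pod at all, and the name may have resolved to a different host.

When a test like this fails, the usual suspects are:

1️⃣ The application inside the Pod is rejecting the request.

2️⃣ The service is misconfigured (wrong port, missing endpoints).

3️⃣ The Ingress rule is not forwarding traffic correctly.

// Test from inside the pod itself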

[root@master akilan]# kubectl exec -it sample-app-5f4b888ff9-l952q -n akilan -- curl -I http://localhost:80

HTTP/1.1 200 OK

Server: nginx/1.27.4

Date: Sun, 23 Feb 2025 15:29:15 GMT

Content-Type: text/html

Content-Length: 615

Last-Modified: Wed, 05 Feb 2025 14:46:11 GMT

Connection: keep-alive

ETag: "67a379b3-267"

Accept-Ranges: bytes

// After updating the hosts file, it works fine through the HTTP NodePort

http://sample-app.local:31989/
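The hosts file entry only makes the name resolve to the node; what the Ingress controller matches on is the Host header of the request. A rough Python sketch of the same request, sent straight to the node IP and HTTP NodePort with the Host header set by hand, might look like this. The helper names build_request and fetch_status are made up for this example, and the IP and port are the ones from this cluster, so adjust them for yours.

```python
import urllib.request

NODE_IP = "192.168.198.140"  # master node IP from `kubectl get nodes -o wide`
HTTP_NODE_PORT = 31989       # NodePort mapped to port 80 of the controller
HOST = "sample-app.local"    # host name from the Ingress rule


def build_request(path: str = "/") -> urllib.request.Request:
    """Build a request aimed at the node that carries the Ingress host name."""
    url = f"http://{NODE_IP}:{HTTP_NODE_PORT}{path}"
    return urllib.request.Request(url, headers={"Host": HOST})


def fetch_status(path: str = "/") -> int:
    """Send the request and return the status code (needs access to the node).

    urlopen raises urllib.error.HTTPError for 4xx/5xx answers such as the 404.
    """
    with urllib.request.urlopen(build_request(path), timeout=5) as response:
        return response.status
```

Calling fetch_status() from a machine that can reach the node should behave like opening the URL in a browser, without needing the hosts file entry.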

// Now the detailed explanation

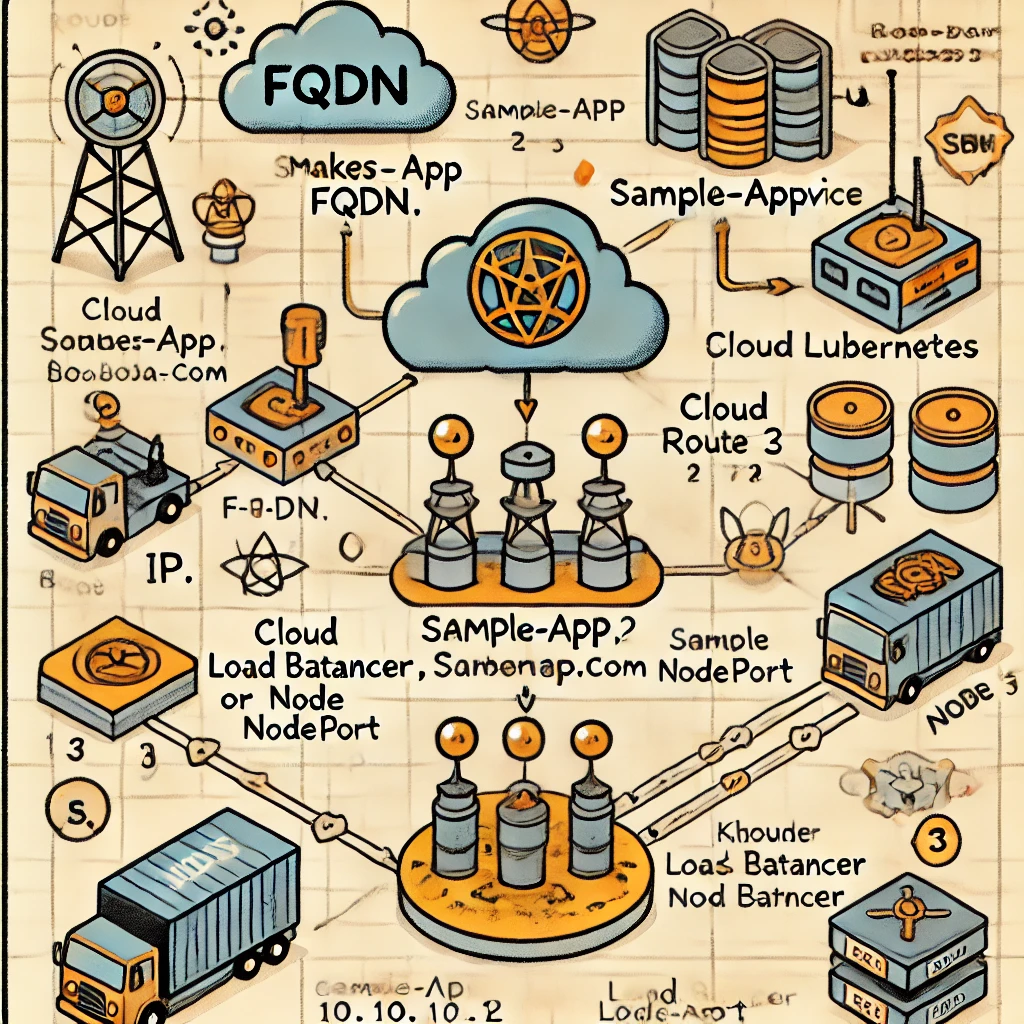

External Request
        |
        v
+---------------------+               +-----------------------+
| Ingress Controller  |<------------->| Ingress Resource      |
| (NGINX/Traefik)     |  uses rules   | (sample-app-ingress)  |
+---------------------+               +-----------------------+
        |
        v
+---------------------+
| Service (ClusterIP) |
| (sample-app)        |
+---------------------+
        |
        v
+---------------------+
| Deployment Pods     |
| (sample-app)        |
+---------------------+
        |
        v
Response Back to User

// Let's go one by one. The request first lands on the Ingress controller, which we reach through the master node's IP. While the controller's service was of type LoadBalancer we could not reach it (its EXTERNAL-IP stayed <pending>), so we changed the type to NodePort. After that, the master IP plus the NodePort gets us as far as the Ingress controller.

// Reaching the controller is not enough to reach the app, because access to the app depends on the Ingress resource we configured.

//Ingress Controller: This is the entry point for external traffic into your Kubernetes cluster. It listens for requests and forwards them to the appropriate services based on the rules defined in the Ingress resource.

//NodePort: Changing the Ingress controller's service type to NodePort allows access from outside the cluster by exposing the Ingress controller on a specific port on all cluster nodes.

//You can access it through the NodeIP:NodePort

ingress-nginx-controller:

This is the main deployment for the NGINX Ingress controller. It’s responsible for handling and routing external HTTP/S traffic to the appropriate services within the Kubernetes cluster.

It watches for changes to the Ingress resources in the cluster and configures NGINX based on the routing rules defined in those resources.

ingress-nginx-controller-admission:

This is the service in front of the admission webhook, which Kubernetes calls to validate Ingress resources when they are created or updated.

It helps ensure that Ingress resources are configured correctly, for example by checking that the paths, services, or backends are valid before the resource is accepted.

This way, only Ingress resources that the NGINX controller can process make it into the cluster.

// Next, the controller consults the Ingress resource, forwards the request to the Service we created, and the Service reaches the pod where the app is running. The pod's response travels back through the Ingress controller to the end user.

Key Concepts

Deployment:

A Deployment is a Kubernetes resource that manages your application’s pods (containers). It ensures that a specified number of replicas of your application are running. It does not contain the app's code itself; its pod template names the container image that does.

Service:

A service provides a stable endpoint (IP address and DNS name) for accessing the application. It abstracts access to a group of pods and balances the traffic to them. In your case, the sample-app service exposes your app pods over a specific port.

Ingress Controller:

The Ingress Controller is a component responsible for processing Ingress resources. It acts as a reverse proxy and load balancer that routes external HTTP/HTTPS traffic to your services inside the cluster based on Ingress rules. The Ingress controller is usually installed using Helm to simplify configuration and management.

Ingress:

An Ingress defines the HTTP routing rules for the Ingress controller. It specifies how requests should be routed to services based on the request URL, host, or paths.
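How a Service finds "its" pods comes down to label matching: the selector in the Service spec must be present on the pod's labels. The Python sketch below shows that idea using the sample-app selector from the manifest above. The function name selects and the extra tier label are hypothetical, chosen only for illustration.

```python
def selects(service: dict, pod_labels: dict) -> bool:
    """True if every key/value in the Service selector is present on the pod."""
    selector = service["spec"]["selector"]
    return all(pod_labels.get(key) == value for key, value in selector.items())


sample_service = {
    "metadata": {"name": "sample-app", "namespace": "akilan"},
    "spec": {"selector": {"app": "sample-app"}},
}

# Extra labels on the pod are fine; only the selector keys have to match.
print(selects(sample_service, {"app": "sample-app", "tier": "web"}))  # True
print(selects(sample_service, {"app": "other-app"}))                  # False
```

If the labels in the Deployment's pod template and the Service selector drift apart, the Service ends up with no endpoints, which is why checking kubectl get endpoints is a useful debugging step.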

Why Use Helm for Ingress Controller Installation?

Helm is a package manager for Kubernetes that helps with the installation, configuration, and management of Kubernetes applications. Installing the Ingress controller using Helm simplifies the process because:

It configures multiple components (such as deployment, service, configmap, etc.) in a single command.

It provides easy upgrades and rollbacks.

You can manage configurations via values.yaml and templates.

You could install the Ingress controller manually, but Helm automates and manages these steps more efficiently, especially as the complexity of your cluster grows.

How It All Connects

Here’s how each component interacts with one another:

Deployment creates the pods running the application (sample app).

Service exposes these pods internally, making them accessible via an IP and port within the cluster (ClusterIP).

The Ingress Controller (like NGINX Ingress) listens for incoming traffic on specific ports (80, 443) and uses Ingress resources to know where to route the traffic.

An Ingress resource defines rules for the Ingress controller. These rules tell the controller which service to route requests to, based on the request’s URL or host.

How Traffic Flows

Request comes to the cluster:

External traffic hits the Ingress Controller (NGINX, Traefik, etc.) on NodePort or LoadBalancer IP (depending on your setup).

Ingress Controller examines Ingress rules:

The Ingress Controller looks at the Ingress resources to determine how to route traffic.

Ingress Controller routes to the correct service:

Based on the host and path, the Ingress Controller forwards the request to the appropriate service inside the cluster (like sample-app service).

Service forwards to the correct pods:

The service sends the request to one of the pods in the deployment that is serving the app (sample-app pods).

App processes and returns the response:

The app processes the request and sends the response back via the service, through the Ingress Controller, to the external user.
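The routing decision in the middle of this flow can be sketched as a small lookup. The Python below is a simplified model under stated assumptions, not the NGINX controller's implementation: rules are plain tuples, hosts must match exactly, and paths follow the element-wise behaviour of pathType: Prefix. The names RULES, prefix_matches, and route are made up for the example.

```python
from typing import Optional

# Each rule: (host, path prefix, backend). Mirrors sample-app-ingress.
RULES = [
    ("sample-app.local", "/", "akilan/sample-app:80"),
]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match: /foo matches /foo/bar but not /foobar."""
    if rule_path == "/":
        return True
    rule_parts = rule_path.strip("/").split("/")
    request_parts = request_path.strip("/").split("/")
    return request_parts[: len(rule_parts)] == rule_parts


def route(host: str, path: str, rules=RULES) -> Optional[str]:
    """Return the backend of the longest matching rule, or None if nothing matches."""
    candidates = [
        (len(rule_path), backend)
        for rule_host, rule_path, backend in rules
        if rule_host == host and prefix_matches(rule_path, path)
    ]
    return max(candidates)[1] if candidates else None


print(route("sample-app.local", "/"))  # akilan/sample-app:80
print(route("192.168.198.140", "/"))   # None
```

The second call models a request sent to the bare node IP: no rule matches that host, so the controller has no backend to forward to and answers with its own 404.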

In-Depth Explanation of NodePort, ClusterIP, and Ingress

ClusterIP:

The ClusterIP service type is used for internal communication between the pods. It is assigned an internal IP address, and other services in the cluster can access it using this IP.

NodePort:

The NodePort service type opens a specific port on all nodes of the cluster. It allows external traffic to reach the service using <NodeIP>:<NodePort>. This is commonly used for exposing services without a load balancer.

Ingress:

The Ingress is the rule for routing external traffic (HTTP/HTTPS) to internal services.

It acts as an entry point for HTTP/HTTPS traffic into your cluster.

In your setup, the Ingress controller (NGINX) reads the Ingress resource to determine where to send incoming traffic based on the rules defined (like sample-app.local).

Pros & Cons of NodePort vs Ingress:

NodePort:

Pros: Simple, no need for an Ingress Controller.

Cons: Not as flexible for routing multiple applications; you need to manage ports manually, and if you have multiple services, you'd need to manage separate ports for each.

Ingress:

Pros: Centralized management for routing multiple services, handles URL routing, SSL termination, etc.

Cons: Slightly more complex setup since you need an Ingress Controller.

This is my ingress controller

This is my sample app

// My own infrastructure

EXTERNAL-IP is the Load Balancer's IP. // Once we create a property on Akamai, we get an Akamai edge hostname such as sample-app.boobalan.com.akamaiedge.net, which we have to put in our Route 53 CNAME record.

Load Balancer (LB) vs. Ingress

| Feature | Load Balancer (LB) | Ingress |
|---|---|---|
| IP Address | Each service gets a separate public IP (if using a LoadBalancer type service) | One shared IP for all services |
| Scope | Exposes only one service per LB | Routes traffic to multiple services |
| Cost | More expensive (each service needs an LB in cloud) | Cost-efficient (one Ingress for all services) |
| Use Case | Directly forwards traffic to a single service | Routes traffic based on URL/Host to different services |
| Control | Less flexible (just forwards traffic) | More flexible (can handle routing, TLS, authentication, etc.) |
